C++-Python/Grafana - Design of a simple network distributed log collector

Requirements

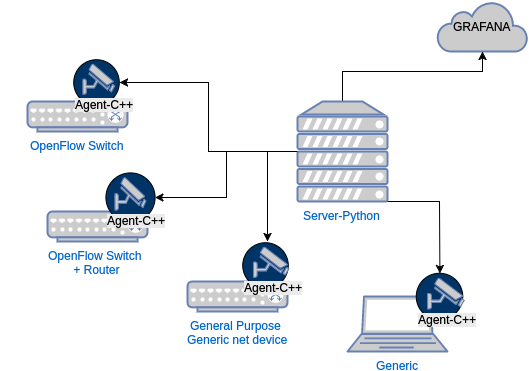

Create a client-server application in order to monitor network nodes and centrally collect reports and logs.

Server Requirements

Functional

- The system must allow the monitoring of events and configurations of nodes (events to be detected) through a single centre.

- The server must be the controller of the entire network.

- The information received from the agents must be periodically updated (polling).

- Optional: The communication must be real time, continuous.

Non-functional

- Communication with agents must be via gRPC.

- Optional: The system must interface with Grafana services (https://grafana.com/)

- The server must be easy to adapt to other log-management platforms or libraries; a high-level language such as Python is therefore a reasonable choice.

Client Agent requirements

Functional

- The agent must monitor network events on the node, such as malfunctions.

- The agent must be able to provide the current network configuration.

- The agent must make the node information available to the server.

Non-functional

- Since the nodes to be monitored could be simple OpenFlow network switches, the agent must have minimal dependencies. Preferably, the program is written in C++ and distributed precompiled.

- For the same reason as the previous requirement, the agent must not impact the performance of the node where it is installed.

- The agent must communicate with the server node via gRPC.

- Optional: The agent must be started during OSHI boot.

Event tracking functionality

Event notification (from Agents to Server)

As required, the Agents detect network events (malfunctions or anomalies) and notify the Server of them.

In general, an event is a boolean condition on a monitored parameter becoming true (for example, during an ARP poisoning attack, the ARP table grows beyond its normal size).

If the system used ad-hoc, hard-coded solutions for every event, a separate module would be needed for each monitored parameter, and the Agent would have to be recompiled every time a new event type is added.

Our system instead offers a single, versatile API to register an event detector on the Agent. Besides reducing the complexity of the Agent, this lets the server register listeners for new events without recompiling or restarting the Agents. The Agent only has to provide the structure that allows the event listeners requested by the server to communicate with it.

Structure of the Event

An event is modeled with the following attributes:

- id = id of the event type, which is also the id of the associated Agent listener.

- polling interval = how often the event condition is checked.

- command = bash script to run on the client.

- comparator = operator used to compare the result of the command with the expected result.

- expected result = the value or threshold that the command result is compared against; it can be a number or a string.

- absolute/relative = whether the result is evaluated as is (absolute) or as the difference from the value at the previous iteration (relative, for numeric results). For example, the condition on lost packets is relative: the command returns the total number of packets lost since startup, while the event is concerned with the number of packets lost per iteration.

- decimal result = boolean that identifies the type of the command result (string or decimal), so that the result is converted, if needed, before the comparison.
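The attribute list above could be represented on the Python server roughly as follows. This is only a sketch: the class name `EventSpec` and its field names are hypothetical and do not come from the project's `.proto` files.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class EventSpec:
    """One event listener definition (hypothetical names, for illustration)."""

    event_id: int                # id of the event type / Agent listener
    polling_interval_s: float    # how often the condition is checked, in seconds
    command: str                 # bash command executed on the agent
    comparator_id: int           # id taken from the comparator table
    expected: Union[float, str]  # threshold or value to compare against
    relative: bool               # True: compare the change since the previous iteration
    decimal_result: bool         # True: convert the command output to a number first


# Example: signal when the number of logged-in users grows between two checks.
users_changed = EventSpec(
    event_id=3,
    polling_interval_s=10.0,
    command="who | wc -l",
    comparator_id=1,  # ">" in the comparator table
    expected=0,
    relative=True,
    decimal_result=True,
)
```

Making the dataclass frozen keeps a listener definition immutable once it has been sent to an agent, which is a design choice of this sketch rather than something the project prescribes.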

Comparator

An id : comparator mapping (e.g. 1 : >) is defined as a standard shared by the client and the server: the id is on the left and a boolean operator on the right. The command executed by the client must return a single result, so that it can be compared with the expected result. To prevent a mismatch between the result of the command and the type on which the comparison is actually made, the equality operation is handled differently for strings and integers.

Comparator table

| id | comparator | description |
|---|---|---|
| 1 | > | If the return value of the command is greater than the expected value, the event is triggered |
| 2 | >= | If the return value of the command is greater than or equal to the expected value, the event is triggered |
| 3 | < | If the return value of the command is less than the expected value, the event is triggered |
| 4 | <= | If the return value of the command is less than or equal to the expected value, the event is triggered |
| 5 | == | If the return value of the command is equal to the expected value, the event is triggered |
| 6 | != | If the return value of the command is different from the expected value, the event is triggered |
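As an illustration of the comparator table, the mapping can be expressed with Python's standard `operator` module. The function name `is_triggered` and its parameters are assumptions made for this sketch; the actual Agent performs the comparison in C++.

```python
import operator

# id -> boolean operator, mirroring the comparator table.
COMPARATORS = {
    1: operator.gt,
    2: operator.ge,
    3: operator.lt,
    4: operator.le,
    5: operator.eq,
    6: operator.ne,
}


def is_triggered(comparator_id, raw_result, expected, decimal_result):
    """Return True when the command result satisfies the comparator."""
    compare = COMPARATORS[comparator_id]
    if decimal_result:
        # Numeric comparison: convert both sides before comparing.
        return compare(float(raw_result.strip()), float(expected))
    # String comparison: compare the trimmed text as it is.
    return compare(raw_result.strip(), str(expected))
```

For instance, `is_triggered(1, "120\n", 100, True)` evaluates `120.0 > 100.0`, while `is_triggered(5, "up\n", "up", False)` compares the two strings without any numeric conversion.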

Events

In the commands below, PIPE stands for the shell pipe character.

| id | command | interval | comparator | type | expected result | description |
|---|---|---|---|---|---|---|
| 1 | `nstat --zero PIPE grep 'TcpOutRsts' PIPE awk '{ print $2 }'` | 1s | > | Absolute | 100 | If the number of TCP packets containing the Reset flag (TcpOutRsts) is too high, the event is signaled (a port scan may be the cause) |
| 2 | `netstat -n -q -p 2>/dev/null PIPE grep SYN_REC PIPE wc -l` | 10s | > | Relative | 300 | If the number of TCP connections in the Syn Rec phase is too high, a DoS or DDoS attack may be in progress |
| 3 | `who PIPE wc -l` | 10s | > | Relative | 0 | If the number of connected users changes, the event is logged |
| 0 | - | - | - | - | - | Error executing a command |
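The Absolute/Relative column of the events table can be read as follows. The sketch below uses a hypothetical helper, `ValueTracker`, and assumes that the first relative reading counts as "no change"; the source does not say how the first iteration is handled.

```python
class ValueTracker:
    """Turn raw readings into the value that is actually compared."""

    def __init__(self, relative):
        self.relative = relative
        self.previous = None  # no reading seen yet

    def observe(self, reading):
        if not self.relative:
            # Absolute events compare the reading itself.
            return reading
        # Relative events compare the change since the previous iteration.
        # Assumption: the very first reading yields a change of 0.
        delta = 0 if self.previous is None else reading - self.previous
        self.previous = reading
        return delta
```

With a lost-packets counter reporting 1000, 1004 and 1004 on three consecutive iterations, a relative tracker yields 0, 4 and 0, so only the second iteration can exceed a threshold such as 3.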

Implementation architecture

Listener API

- The server allows you to add listeners to clients for monitoring events.

- The server allows you to remove listeners from clients for monitoring events.

- The server allows you to set up a stream-type connection with clients for receiving notifications.
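The three operations above can be pictured with an in-memory stand-in. This is not the project's gRPC service: the class `ListenerRegistry`, its method names, and the rule that a duplicate id is refused are all assumptions made only to show the shape of the API.

```python
import queue


class ListenerRegistry:
    """In-memory stand-in for the add / remove / stream operations."""

    def __init__(self):
        self._listeners = {}
        self._notifications = queue.Queue()

    def add_listener(self, event_id, spec):
        """Register a listener; refuse a second one with the same id."""
        if event_id in self._listeners:
            return False
        self._listeners[event_id] = spec
        return True

    def remove_listener(self, event_id):
        """Remove a listener and report whether it existed."""
        return self._listeners.pop(event_id, None) is not None

    def notify(self, event_id, value):
        """Called by a listener when its condition is met."""
        if event_id in self._listeners:
            self._notifications.put({"event_id": event_id, "value": value})

    def close(self):
        """End the notification stream."""
        self._notifications.put(None)

    def stream_notifications(self):
        """Yield notifications until the stream is closed."""
        while True:
            item = self._notifications.get()
            if item is None:
                return
            yield item
```

In a gRPC setting, the generator in `stream_notifications` would correspond to a server-streaming call, while the other two operations map naturally onto unary calls.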

Server

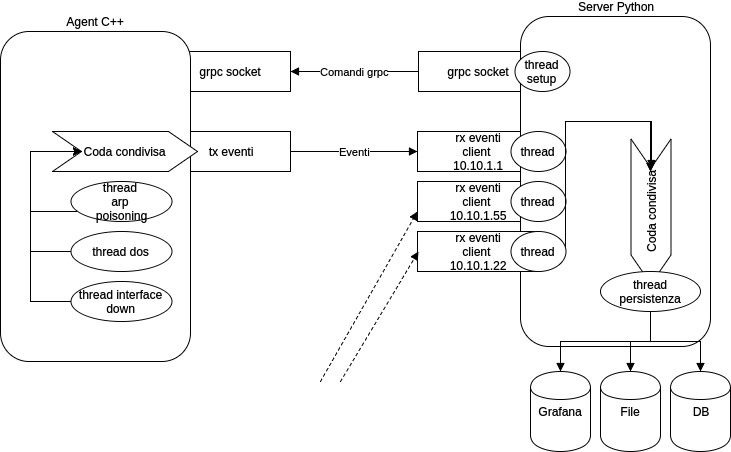

The server maintains a connection with each Agent, through which it receives (by polling or in real time) notifications of the events monitored by that Agent. Communication with the Agents is always initiated by the Server, so that an unreachable node is detected immediately.

Once events have been received from the Agents, the Server saves them in the database or in the service chosen for data persistence. It does so on a dedicated thread, so that storage does not slow down the reception of events. Each event is identified primarily by the type of listener that detected it, the value returned by the command, and the time of day.
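A minimal sketch of the dedicated storage thread described above, using only the Python standard library. The list `stored` stands in for the database or logging service, and the event dictionary keys are hypothetical.

```python
import queue
import threading
import time

_STOP = object()  # sentinel that tells the writer thread to finish


def writer_loop(events, store):
    """Drain the queue and persist each event; `store` stands in for a database."""
    while True:
        item = events.get()
        if item is _STOP:
            break
        store.append(item)


events = queue.Queue()
stored = []  # stand-in for the database or logging service
writer = threading.Thread(target=writer_loop, args=(events, stored), daemon=True)
writer.start()

# The receiving side only enqueues, so it never waits on storage.
events.put({"listener_id": 2, "value": "412", "timestamp": time.time()})
events.put({"listener_id": 3, "value": "1", "timestamp": time.time()})

# Shut down cleanly: everything queued before the sentinel is stored first.
events.put(_STOP)
writer.join()
```

Because the queue is first-in, first-out, events are stored in the order in which they were received.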

Agent

The basic client has one thread dedicated to transmitting the monitored events to the Server. When the Server requests a new listener for an event via gRPC, the Agent creates a thread to monitor that event. When an event is detected, it is passed through a shared data structure (a queue) to the thread that manages event transmission. The server can also "turn off" any listener on the clients.
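The thread structure of the Agent can be sketched as follows. The real Agent is written in C++; this Python version only illustrates the pattern, and the `Listener` class, its `probe` callable (standing in for the bash command) and the fixed `>` comparison are assumptions of the example.

```python
import queue
import threading


class Listener(threading.Thread):
    """Polls one probe and pushes triggered events onto the shared queue."""

    def __init__(self, event_id, probe, threshold, interval_s, outbox):
        super().__init__(daemon=True)
        self.event_id = event_id
        self.probe = probe  # callable standing in for the bash command
        self.threshold = threshold
        self.interval_s = interval_s
        self.outbox = outbox  # queue shared with the transmitter thread
        self._stop_requested = threading.Event()

    def run(self):
        while not self._stop_requested.is_set():
            value = self.probe()
            if value > self.threshold:
                self.outbox.put((self.event_id, value))
            # wait() doubles as a sleep that stop() can interrupt
            self._stop_requested.wait(self.interval_s)

    def stop(self):
        """Turn the listener off, as the server can request."""
        self._stop_requested.set()


outbox = queue.Queue()
readings = iter([5, 150, 7])
listener = Listener(1, lambda: next(readings, 0), threshold=100,
                    interval_s=0.01, outbox=outbox)
listener.start()
event = outbox.get(timeout=2)  # the transmitter thread would forward this
listener.stop()
listener.join(timeout=2)
```

Using an event object for the stop flag lets a listener with a long polling interval terminate promptly instead of sleeping through the whole interval.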

Security

Since the Agents allow commands to be executed remotely, the risk of these APIs being exploited for malicious purposes should not be underestimated. For this reason, gRPC authentication based on certificates and private keys has been introduced. Certificates can be created with the script in /auth/generate_auth.sh, or during the installation of the Agent client environment.

The system requires a key/certificate pair for the Agents and a key/certificate pair for the server. To simplify setup, the example configuration assigns the same private key and certificate to every client. The system is, however, already designed to support a different key for each client.
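On the Python side, certificate-based authentication could look roughly like the sketch below. The file names `ca.crt`, `server.key` and `server.crt` are hypothetical and may not match what `generate_auth.sh` produces. `grpc.ssl_channel_credentials` and `grpc.secure_channel` are part of the `grpcio` package, which is imported lazily so that the file-loading helper can be tried without gRPC installed.

```python
from pathlib import Path


def load_tls_material(directory):
    """Read the PEM files as bytes; the file names are hypothetical."""
    base = Path(directory)
    return {
        # Certificate used to verify the agent's identity.
        "root_certificates": (base / "ca.crt").read_bytes(),
        # Key and certificate the monitoring server presents to the agent.
        "private_key": (base / "server.key").read_bytes(),
        "certificate_chain": (base / "server.crt").read_bytes(),
    }


def open_secure_channel(target, directory):
    """Open a certificate-authenticated channel to an agent."""
    import grpc  # requires the grpcio package

    credentials = grpc.ssl_channel_credentials(**load_tls_material(directory))
    return grpc.secure_channel(target, credentials)
```

A call such as `open_secure_channel("10.0.0.5:50051", "auth")` would then return a channel on which the generated stubs can be used; the address and directory here are placeholders.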

Server installation in Python

Python Environment Configuration

Install gRPC and protobuf via pip.

HelloWorld

Before starting the Python HelloWorld, you need to start the C++ helloworld in agent-cpp.


This way the .proto files are also compiled for Python. Finally, run the Python helloworld.

From gRPC's point of view, the server actually takes the role of client, since it asks the agent to execute commands.

If both helloworld components work, a "Hello" message is returned.

Running Server

Running the server compiles the project .proto files and then starts the Python server.

Tutorials and References

It is important to follow the grpc guide: https://grpc.io/docs/languages/python/basics/


Installing C++ clients

Setup

Environment Configuration for C++ Client

This step is required to compile the C++ code. Compiling gRPC takes a long time (about 10 minutes on an i7 7700K).

The setup script installs the necessary packages, clones the gRPC repository to grpc/grpc/, compiles the libraries, and installs protobuf.

Environment setup for legacy systems

On old systems (for example, with gcc < 4.6), the dedicated setup script must be used instead.

This mode is required for the Oshi VM 8 virtual machine.

Source compilation

After configuring the environment, compile the C++ sources.

HelloWorld

To verify that your system is operational, try the gRPC helloworld.

From gRPC's point of view, the agent actually takes the role of server, since it exposes a network port and executes the commands requested by the client (in our case, the Python server).

Once this gRPC server has started, start the corresponding helloworld in the server folders of the repository: the Python program will show the response to the HelloWorld sent by the C++ program.

Run Agent


Tutorials and References

It is important to read https://grpc.io/docs/languages/cpp/quickstart/


Authors

A.F. and A.G.

Repositories

The full source code repository is publicly available on GitLab: